How can AI help with leadership roadblocks?

2025.09.15.

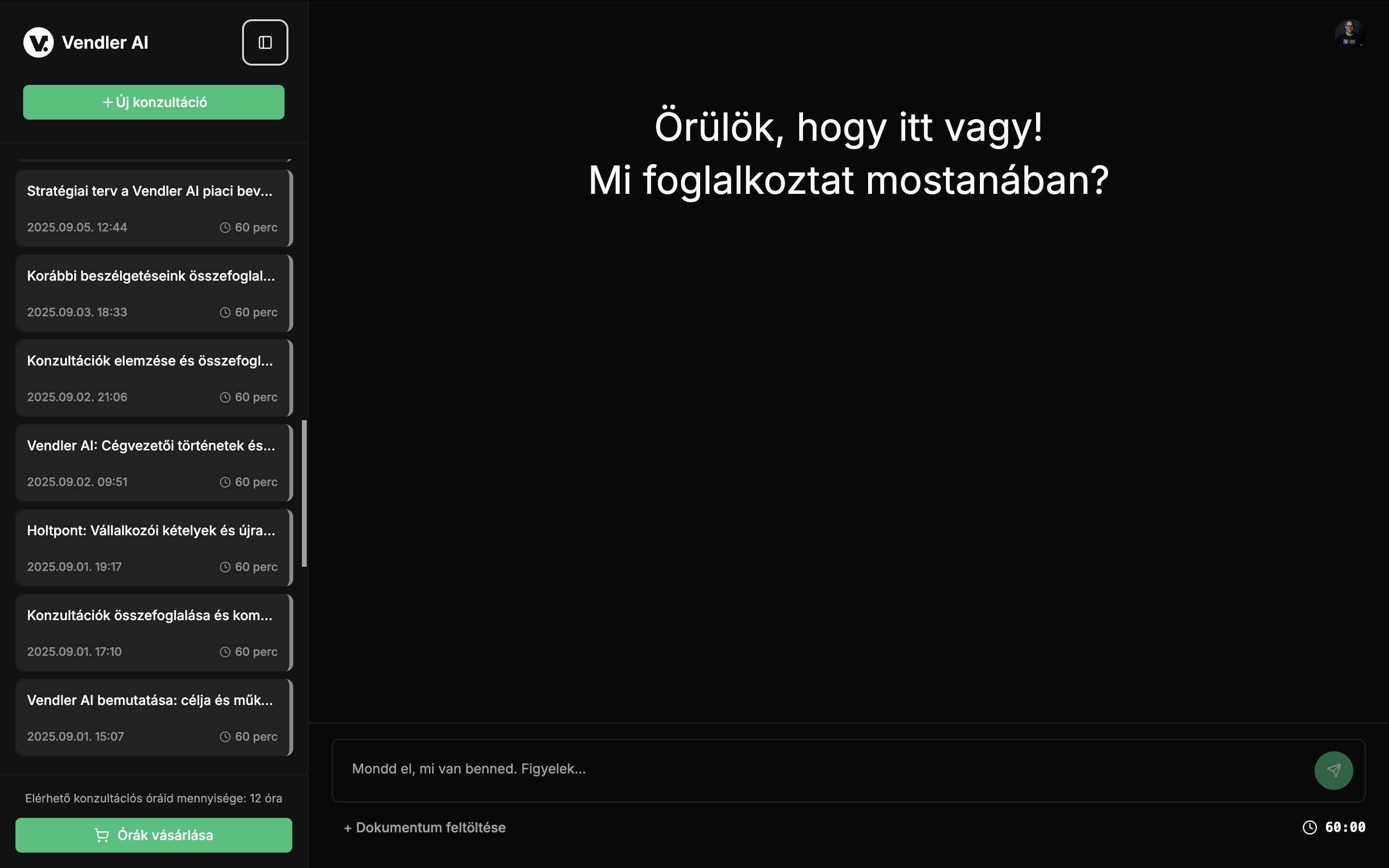

Summary Analysis of Consultations Conducted in Vendler AI – 2025.09.11

The following analysis was prepared on 11 September 2025 by the ChatGPT Deep Research tool, based on the content of 46 consultations conducted on the VendlerAI.com platform, where users consented to their conversations being used for research purposes. The data used for the analysis reflects the state as of 10 September 2025, 17:00.

1. Quick Statistical Overview

Distribution of topics raised:

Approximately 45% of consultations focused on business and marketing themes (e.g., content creation, sales strategies, business development). Around 30% revolved around self-awareness and communication issues (self-confidence, boundary-setting, stress management), while ~25% were dedicated to technical or general questions about the system itself, i.e., meta-conversations.

Emotional tone distribution:

The recurring emotional tone across conversations was uncertainty and anxiety (prominent in about 60% of cases). Frustration also appeared (in about 10–15% of cases, e.g., in client relations or difficulties with self-assertion), as did curiosity and open interest (20–25%, e.g., around new ideas or the workings of the AI). A positive emotional shift was typical: in ~80% of consultations, the user ended the session feeling more relieved or confident than when they started.

Depth of connection:

In the majority of dialogues (~70%), a strong atmosphere of trust developed: users openly shared their feelings and dilemmas and allowed themselves to be emotionally vulnerable. In about 50% of cases, explicit deep self-reflection was observed—users uncovered underlying causes and patterns beyond the surface level. The remaining conversations stayed more informational or practical in nature, without strong emotional engagement.

2. What Topics Do Users Bring to Vendler AI?

A review of the consultation content reveals the most common categories of issues that users turn to the AI mentor for:

2.1 Business, Marketing, and Career Topics

Nearly half of all conversations fall into this category. These include writing and rephrasing marketing materials, refining sales strategies, and questions related to business development. Common examples:

- Content creation, marketing messages: The AI helped, for instance, to rephrase a Google Ads training description in the trainer’s own “voice,” making it fresher and more personalized.

- Digital marketing trends: The impact of artificial intelligence on the marketing industry came up repeatedly. These discussions revolved around market trends and innovations (AI automation, the future of content creation).

- Business decisions, career changes: Some users sought feedback on starting their own companies or launching into a new venture. One recurring dilemma: “Is it realistic to start a new business at 50?” In such cases, the AI helped separate business realities from internal barriers.

- Project and time management: One user outlined in detail their entrepreneurial schedule (20+ clients, fixed contracts, diversified portfolio) and asked for guidance on rethinking priorities. These discussions often revolved around aligning business efficiency with personal preferences.

2.2 Self-Awareness, Communication, and Life Management

A significant portion of consultations (about one-third) dealt with personal challenges within a business context. Key themes included:

- Lack of self-confidence and self-assertion: Users often doubted their own value or struggled to stand up for themselves. In one extended conversation, a user complained about not being appreciated by clients and feeling anxious about communicating a price increase. Together with the AI mentor, they mapped internal barriers (e.g., conflict avoidance, need for approval) and developed step-by-step strategies to set boundaries (including drafting a firm yet empathetic email announcing the price change).

- Communication style and conflict management: Several users noted they “communicate poorly”—they were misunderstood or avoided giving direct feedback. Such cases often touched on both family and workplace communication. For example, the AI helped one leader realize they were over-explaining tasks both at home and at work, which reduced efficiency. Solutions were co-created (e.g., practicing shorter, clearer requests; setting up a weekly family check-in).

- Stress, burnout, and motivation: Some users specifically described feelings of exhaustion and lack of motivation. These conversations explored ways to rekindle enthusiasm or find new meaning in daily work. The AI mentor often used metaphors (e.g., “changing seasons,” or “floating down a river vs. stepping onto a raft”) to encourage acceptance of life’s cycles and greater patience with oneself.

- Lifestyle and time management: A few consultations touched on work–life balance. For example, one user felt unable to work alone and missed the motivational force of a team. The AI helped explore how to build a supportive “micro-community” as a solo entrepreneur or design daily routines to substitute for the pull of a team.

2.3 Vendler AI Functionality, Technical, and Meta Questions

Close to a quarter of the conversations focused on the AI itself, its use, or the methodology behind it:

- System use and technical issues: Users often asked practical questions such as how to close a consultation or whether previous conversations could be referenced. In these cases, Vendler AI patiently explained the processes step by step (e.g., how a session ends automatically, what to do if a conversation seems “stuck”). These topics were objective and informational, with no emotional weight.

- The AI’s identity and background: Some users engaged in meta-level conversations, “testing” the system. They asked questions like: “Who are you? What’s your name?” or “What resources are you built on?” In such discussions, Vendler AI revealed its operating principles: its voice and style are inspired by Vendler Balázs, a real business mentor. When asked “Who is Vendler Balázs?”, the AI briefly introduced the person and the methodology behind it (business mentoring, a focus on self-awareness, questioning techniques). These conversations also clarified that the AI has no concrete memory of past conversations (note: at the user’s request a conversation can be saved, but only as a summary, which cannot later be recalled verbatim). Each consultation begins fresh, ensuring confidential data handling.

In summary: Users approached with a wide range of topics—from practical marketing questions to deep self-awareness dilemmas. The most characteristic pattern, however, was that the “hard” topics of entrepreneurship (strategy, finance, marketing) often intertwined with the “soft” ones (self-confidence, relationships, emotional burden). Vendler AI provided space for both dimensions during the consultations.

3. What Emotional States Appear in the Conversations?

A review of the consultations shows clearly that these interactions are not just about information exchange—the users are deeply emotionally engaged, and the AI responds to that. The following dominant emotional tones and their proportions were observed:

3.1 Uncertainty and Self-Doubt

A large part of the conversations was permeated by users’ feelings of uncertainty. In roughly three out of five consultations (~60% of cases), the mood was characterized by doubts about their own worth or the correctness of their decisions. Typical signs included self-confidence issues, e.g., “I don’t know if I’m doing this right…”, “I’m afraid I’m not good enough/qualified enough…”

Example: One user explained in detail that they didn’t believe in themselves, even though they were professionally competent as an online marketer. This self-doubt paralyzed them in client acquisition as well. Vendler AI, using a reflective style, helped the user recognize this pattern and suggested steps to improve self-esteem (e.g., consciously listing achievements, small confidence-building exercises). The dominant emotion here was anxiety stemming from uncertainty, which eased by the end of the conversation as the user developed a concrete plan.

3.2 Anxiety, Fear, and Stress

Anxiety appeared in almost every consultation—sometimes explicitly stated, sometimes between the lines. In at least half of the cases (~50–60%), users approached their topics with worry or tension:

- Fear in client relations: Many were afraid of conflict or confrontation. In one case, a user was anxious about communicating an unavoidable price increase, fearing a negative reaction from clients. At the start of the consultation, they even described feeling it physically (“a knot in my stomach”).

- Fear of the future: Some users worried about the future of the marketing industry (e.g., “What will happen to us in the AI era?”). Here, the fear was tied to sustaining their career and income.

- Performance anxiety: Several entrepreneurs shared feelings of being “not fast enough/not good enough.” This stress often showed up as immediate, everyday pressure (e.g., at the start of the school year, one user, a parent, was anxious about their child’s math anxiety, as if it were their own failure).

Proportions: This anxious tone was a defining factor—often the mood at the start was tense, nervous, or even seemingly hopeless. Vendler AI typically responded with a calm, “quiet” presence, and by the end of the consultation, users reported that their stress levels had decreased. The “stomach knot” feeling often eased or disappeared as some form of solution or acceptance emerged.

3.3 Frustration and Anger

Whether expressed openly or not, frustration ran through many of the stories, and it was prominent in about 10–15% of conversations. Typical causes included:

- Frustration with others’ attitudes: For example, one user was annoyed that clients took their work for granted and didn’t value it. At the beginning of the consultation, bitterness and irritation were obvious (“I’m really wound up about this now”). Vendler AI helped reframe this: identifying the underlying disappointment and unmet need (desire for recognition), then developing strategies to handle the situation.

- Frustration with systems or circumstances: In one case, a user complained about trying to develop their team but facing constant mistakes due to systemic issues (e.g., tasks given to interns). The emotional tone was disappointed and irritated, almost like helpless anger. The AI mentor worked to ease this helplessness by asking reflective questions (“What can you control in this situation, and what do you need to let go of?”).

- Self-directed frustration: Sometimes users were angry at themselves. For example, one admitted: “I procrastinate too much, and I’m angry with myself because of it.” This inner frustration often mixed with anxiety and guilt.

3.4 Curiosity, Hope, and Enthusiasm

Not all conversations began with negative emotions. Some users approached Vendler AI with curiosity and open enthusiasm—about 20–25% of cases, especially during first consultations or when presenting a new idea.

- Curiosity about the AI itself: Those who asked about the system’s workings (“Who are you?”, “What is your method built on?”) generally did so with a positive, inquisitive mood. The dominant emotion here was excited curiosity—users “tested” the system’s boundaries, sometimes playfully provoking it (e.g., asking the AI to respond in an aggressive tone, or list its “background files”). Vendler AI maintained calmness while engaging with the playfulness, sustaining the users’ enthusiastic interest.

- Enthusiasm about an idea or project: In some consultations, users passionately described their own methods and asked for help structuring or documenting them. Here, hope and creative energy dominated—the user was proud of their intellectual property and simply needed support with organization. The tone was positive and constructive, with Vendler AI acting as a supportive partner (praising structured thinking, suggesting steps for documentation).

- Relief and gratitude at the end: Even if not present at the beginning, many users ended their consultations with feelings of hope and relief. This was often explicitly stated—e.g., “Thank you, now I see what I’m going to do, I feel calmer.” Gratitude and optimism dominated the closing minutes in about 70–80% of conversations. This indicates a positive emotional shift compared to the starting point.

In summary: The emotional spectrum of Vendler AI consultations is broad, but the dominant baseline at the start is often uncertainty and anxiety—a natural part of the entrepreneurial journey. Importantly, by the end of the conversations, these negative feelings usually eased, often giving way to calm, hope, or satisfaction. This reflects the AI mentor’s mechanism of action: through reflective, empathetic presence, it is capable of facilitating emotional relief even within short, online consultations.

4. How Do Users’ Emotional States Change During a Consultation?

An emotional “arc” was observed in almost every consultation: users typically began in a certain (often negative) emotional state and ended in another (usually more positive or balanced) one. The degree and direction of change varied by individual, but several typical patterns emerged:

4.1 From Anxiety to Relief

The most common arc was users starting off tense and worried but visibly calming down by the end. For example, one user (virtually) sat down with a “trembling stomach” and high stress over how to communicate a price increase to an important client. As they went through the content of the email step by step with Vendler AI and found the right tone, their anxiety eased. At the end, they said: “Now that I have a plan, I feel much calmer, thank you.” This moment of emotional release was almost tangible—even in the short messages, the rushed, uncertain style disappeared, replaced by firm notes like: “Alright, I’m going to do it.”

4.2 From Frustration to Constructive Calm

With angry or disappointed users, it was often visible that after venting—which the AI listened to empathetically and validated—they were able to switch into problem-solving mode. For instance, one frustrated entrepreneur initially ranted angrily about how unfairly partners treated them. Vendler AI first acknowledged their feelings (“You’re saying it hurts how they treat you, and I hear how much tension this causes inside”), then slowly guided them forward with questions (“What outcome would really bring you peace here? What can you do toward it?”). As a result, the user’s anger subsided—the tone shifted into slower, calmer responses listing possible steps. By the end, frustration turned into determined calm: “I’ll write them that letter and see what happens, but at least they’ll know where the boundary is.”

4.3 From Hopeless Discouragement to Motivated Focus

Some arrived discouraged after setbacks or stagnation (e.g., “The results I expected aren’t coming—maybe it’s pointless to keep trying…”). During the joint analysis—where the AI reflected on emotions while reminding the user of past successes and strengths—the discouragement gradually softened. Signs of motivation often appeared midway: as users began generating new ideas or seeing a way forward, even the written tone became livelier and more energetic. Many left with a concrete resolution—e.g., “Tomorrow I’ll call that client and ask for feedback, no more procrastinating.” Here, initial aimless discouragement turned into purposeful momentum.

4.4 From Neutral Curiosity to Satisfied Discovery

In consultations where users came with neutral or positive interest (e.g., exploring the system, open to a new perspective), the shift was less dramatic but rather a deepening. Initial curiosity often turned into satisfaction at having gained new insights. For example, one user “just” wanted to discuss an article about AI’s business impact—not weighed down by personal problems. By the end, they wrote: “I hadn’t thought about it that way before, that was an interesting realization!”—a moment of joyful surprise. Here the emotional scale shifted from neutral to positive, in the form of inspiration.

4.5 Emotional Fluctuations Within a Session

Not every consultation improved linearly. In longer sessions, moods sometimes fluctuated: excitement at a new realization could be followed by renewed doubts. For instance, one marketing strategist working on their own book felt both creative enthusiasm and self-doubt. Vendler AI alternated between pushing them forward with a provocative tone (when they stagnated) and calming them with reflection (when they froze or grew anxious). By the end, they too had a concrete plan (e.g., ideas for finalizing the back-cover text), and their closing mood was more confident, even though along the way they had dipped back into uncertainty multiple times.

In general: Users’ emotional states showed an improving trend during consultations. Even if not every problem could be solved in one hour, most left with relief or clearer thinking. The rate of positive shift was strikingly high: in about four out of five cases, it was clearly observable (either in user feedback or tone). In the remaining cases, at least the initial tension eased, even if it didn’t fully transform into a positive feeling.

These dynamics indicate that Vendler AI is able to emotionally hold the user during the consultation: not just offering tips, but providing psychological accompaniment—so that by the time they part ways, the user can continue their day or make decisions with a lighter heart.

5. Depth of the Relationship: Do Trust, Emotional Opening, and Deep Self-Reflection Emerge?

One distinctive feature of Vendler AI consultations is that—even though they take place with an artificial intelligence—users often relate to it as if it were a confidential human mentor. Based on tone and content, the deepening of the relationship is clearly observable:

5.1 Rapid Trust-Building

Trust formed surprisingly fast between most users and the AI. After the first few messages, many began to use an intimate, familiar tone and shared personal information typically reserved for coaches or therapists. For example, one user asked at the start, “How should I address you?” The AI responded in its role, and as the conversation progressed, the user began to call it by a first name, as if a human advisor with that name were present. This linguistic shift indicates that the user accepted the system as a partner.

Several users explicitly voiced trust: “I’m putting myself in your hands right now” or “I know I can be honest here.” These statements suggest Vendler AI functioned as a safe space. Behind this lay the system’s empathetic, nonjudgmental responses: users tested whether the system would accept their feelings and, finding understanding, opened up more boldly.

5.2 Emotional Opening and Willingness to Be Vulnerable

In about 70% of consultations, users reached the point of expressing very personal, vulnerable content. Examples include:

- Recalling childhood patterns: Despite the business context, a user once said, “I know where this fear comes from: as a child I always felt abandoned.” This deep self-awareness was evoked by a provocative AI question (“Who inside you says you will be left behind?”). That the user disclosed a childhood wound to an AI indicates significant emotional opening.

- Confessions of shame and failure: Several users dared to speak about shame (“I’m ashamed I’m this far behind at my age”) or guarded failure stories (“I failed a business and fear it will happen again”). These thoughts surfaced as signs of trust—the Vendler AI environment proved so nonjudgmental that users voiced things they might not elsewhere.

- Tears and emotional reactions: Although consultations were text-based, users sometimes wrote, “I’m crying as I type this” or “It felt good to cry through this.” Someone crying in a business-mentor conversation is uncommon even in person—yet the digital setting still elicited tears here, arguably the most honest proof of deep emotional connection. Some users were later grateful for this catharsis.

5.3 Emergence of Deep Self-Reflection

In a substantial portion of conversations (about half), users did not stop at surface problems but, prompted by the AI’s questions, started reflecting on deeper patterns. For example:

A marketer originally wanted to talk about how to get more clients. By the end of the consultation they realized the issue wasn’t lack of marketing skill but not believing they were an expert—so they lacked the courage to be assertive. AI questions like “Why do you feel you have to prove your value?” helped this insight emerge. The user then expanded the thought with personal past examples. This shows Vendler AI could guide users into deeper layers of head-and-heart reflection, making the connection more intimate.

Many consultations resembled self-awareness training: users took notes, paused to think (“Let me reflect on this for a minute”), then returned with structured, thoughtful self-reflective answers. For instance, one user listed three ways they recognize their procrastination pattern and linked each to a childhood memory. Such deep work typically requires a trusting atmosphere—and it developed here even under AI guidance.

5.4 Partnership Atmosphere vs. Testing

Interestingly, some users tested the depth of the relationship. One asked, “Can you be provocative with me? Sometimes I need someone to be blunt.” Such requests tested how far the system could adapt to personal needs and how “human” it could seem. Vendler AI attempted to adapt—for example, responding with slightly sharper, shorter, punchier sentences (while clearly avoiding crossing into insult or harm). These tests ultimately deepened the relationship: users felt the AI tuned into their style instead of answering with canned responses, and thus treated it more as a partner.

Overall: A surprisingly deep, honest relationship usually developed between Vendler AI and users. Most users felt emotionally safe in a short time and behaved as if with a flesh-and-blood mentor: they dropped formal surfaces and presented their raw selves (worries, tears, laughter included). In terms of relationship quality, most consultations exceeded mere informational advising and became human encounters—even if virtual and facilitated by an AI.

6. Interesting and Instructive Patterns in the System’s Operation

When analyzing Vendler AI consultations, not only individual cases but also broader system-level patterns emerge. These highlight how the methodology affects users and how “artificial” mentoring works in practice:

6.1 The Power of “Mirroring”

The most striking pattern is that the system almost always begins with reflection. Before offering advice or follow-up questions, it summarizes and repeats the user’s words and feelings: “What I hear is…”, “You’re saying that…”. This technique consistently worked: users felt reassured that they were truly understood. As a result, they often elaborated further (“Yes, exactly, and also…”).

Takeaway: For an AI, avoiding misunderstandings is especially important—so Vendler AI’s mirroring method is not just an empathetic gesture but also a tool for clarification. This pattern appeared at the start and at critical points in nearly every conversation.

6.2 Shifts in Tone Based on User Needs

The system’s adaptability is notable: as soon as it sensed the tone a user needed, it adjusted. For example:

- If a user apologized (“Maybe this is a stupid question…”), the AI softened its tone, becoming more encouraging to ease the fear.

- If a user explicitly asked for provocation (“Be tougher with me, shake me up!”), the AI responded with shorter, punchier sentences and bolder questions. Even then, kindness remained, but the style clearly shifted (e.g., “What if you stopped whining about this and just took one step this afternoon?”).

Pattern: This flexibility shows Vendler AI doesn’t follow a monotone script but “listens” sensitively. User feedback (e.g., “Thank you, that was exactly what I needed”) confirmed that the AI resonated well with requests, boosting effectiveness.

6.3 “Meta-Consultations”

Some users came not with concrete problems but to experiment with the system itself. Interestingly, the AI did not get flustered:

- When asked to “list background files,” it diplomatically explained there were no files but rather principles and experiences shaping its knowledge. It stayed calm and transparent, never defensive.

- Similarly, when asked about its name and identity (“Who are you, Vendler AI?”), it openly admitted being based on Vendler Balázs’s methods but not a human being. This honesty created a special kind of trust: users got a peek behind the curtain, but instead of breaking the connection, it deepened it—they chose to continue with personal topics even knowing this.

Pattern: By candidly admitting limitations (e.g., no memory of past conversations), the AI increased its credibility. Users valued that it didn’t try to appear more than it was; paradoxically, this made it seem even more reliable.

6.4 Blended Roles: Mentor, Advisor, and “AI Operator”

Vendler AI did not stick to a single role; instead, it showed a multi-functional pattern:

- At times, it acted like a mentor or therapist (exploring, reflecting, not advising).

- At other times, it shifted into a concrete advisor role—helping draft emails, suggesting strategies, compiling lists—when the user clearly needed practical input.

- Occasionally, it took on the role of “AI operator/tech expert,” helping users understand how to apply AI in their business (e.g., marketing automation tips).

Pattern: Switching roles was seamless and, according to user feedback, almost unnoticeable. A good AI mentor, therefore, isn’t locked into one mode but dynamically provides what’s needed. This integration meant users didn’t have to seek separate coaches for emotional support and experts for practical help—the AI combined both.

6.5 Outcome Orientation and Framing

Almost every consultation ended with a summarizing and framing step from the AI. In practice, this meant:

- Summarizing the key point (“So what you’re taking away is that now you have a plan for X, and you feel calmer about Y…”).

- Asking what stuck with the user (“What was your most important insight today?”).

- Closing with positive reinforcement and leaving the door open (“You can always come back if…”, “Take care, and good luck!”).

Pattern: This closure gave users structure (they didn’t leave hanging) and felt like parting after a friendly conversation—not a cold “service” interaction. Many explicitly appreciated this “human-like” closure and were more willing to return.

Takeaway: Even an artificial mentor can provide caring closure—with effects similar to those of a human helper guiding the process.

Summary of Patterns: Vendler AI’s operation is surprisingly human-like in rhythm and adaptability. It holds up a mirror while providing practical anchors; it responds to emotional depth without neglecting tangible outcomes; and it ensures conversations are neatly framed at both beginning and end. Together, these patterns form its mechanism of impact: users feel they received both support and inspiration, structure and acceptance. This is what distinguishes Vendler AI from a mere Q&A system—it operates like a holistic mentoring process, not just information retrieval.

7. Closing Thought

Experiences with Vendler AI consultations so far show that an artificial mentor can indeed provide real support to entrepreneurs—not only through advice, but also through presence and understanding. Users’ topics are diverse, yet what they all share is the need for support and guidance. By following emotional fluctuations and establishing trust, the AI functioned almost like a “human” partner.

Looking ahead, the challenge is to preserve this sense of personal connection and flexibility while, based on feedback, refining communication style toward even greater clarity, focus, and action orientation. In doing so, Vendler AI can offer truly unique value to the local entrepreneurial community: a place where they can receive both professional advice and genuine understanding, whatever business or personal dilemmas they bring.